Background and introduction

I’m currently working on extracting metrics from multiple Fronius Symo inverters. There are essentially two approaches to solve this problem:

- Connect the inverter to the Internet and upload everything to SolarWeb, a proprietary metrics platform hosted by Fronius. Use the Fronius SolarWeb mobile app to view the data.

- Operate the device offline and collect the metrics yourself. Fronius offers a JSON-based API to query realtime and archive data from an inverter. The device also offers a push service, where the inverter continuously uploads its metrics to an FTP server or sends them to an HTTP endpoint. Both methods can be used without an Internet connection.
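To give an idea of the pull variant, here is a minimal sketch that queries the realtime data and sums the AC power of all inverters. The path `/solar_api/v1/GetInverterRealtimeData.cgi`, the `Scope=System` parameter and the `Body.Data.PAC.Values` layout are assumptions based on the Fronius Solar API v1 documentation and may differ between firmware versions. The function names and the sample values are made up for illustration.

```python
import json
from urllib.request import urlopen

# Assumed endpoint of the Fronius Solar API v1; verify against your firmware.
REALTIME_PATH = "/solar_api/v1/GetInverterRealtimeData.cgi?Scope=System"


def total_ac_power(payload: dict) -> float:
    """Sum the AC power (W) over all inverters in a Scope=System response."""
    values = payload["Body"]["Data"]["PAC"]["Values"]
    # Skip entries without a reading (None) instead of failing on them.
    return float(sum(v for v in values.values() if v is not None))


def fetch_realtime(host: str, timeout: float = 5.0) -> dict:
    """Fetch and decode the realtime data from one inverter."""
    with urlopen(f"http://{host}{REALTIME_PATH}", timeout=timeout) as resp:
        return json.load(resp)


# Made-up payload in the assumed layout, so the parser can be tried offline.
SAMPLE = {"Body": {"Data": {"PAC": {"Unit": "W", "Values": {"1": 1250, "2": 980}}}}}

if __name__ == "__main__":
    print(total_ac_power(SAMPLE))
```

Calling `fetch_realtime()` with the address of an inverter and passing the result to `total_ac_power()` should work the same way as with the sample payload, provided the assumed layout matches.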

As you might have guessed, I implemented the second approach, where each inverter continuously pushes its metrics to a server on the local network. From there, the data is picked up and imported into InfluxDB. Grafana is used to visualize the metrics. Please contact me if you are interested in how to get collection, transfer to InfluxDB and visualization up and running for Fronius inverters.
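The import step essentially means turning each sample into InfluxDB line protocol. The following is a simplified sketch of that conversion, not the actual importer: the measurement, tag and field names are hypothetical, only numeric fields are handled, and the measurement name is assumed to contain no characters that need escaping.

```python
def _escape(value: str) -> str:
    """Escape commas, equals signs and spaces in tag keys, tag values and field keys."""
    return value.replace(",", r"\,").replace("=", r"\=").replace(" ", r"\ ")


def to_line(measurement: str, tags: dict, fields: dict, ts_ns: int) -> str:
    """Build one line of InfluxDB line protocol with float fields only."""
    # Tags are sorted by key, which InfluxDB recommends for write performance.
    tag_part = "".join(
        f",{_escape(k)}={_escape(str(v))}" for k, v in sorted(tags.items())
    )
    field_part = ",".join(f"{_escape(k)}={float(v)!r}" for k, v in fields.items())
    return f"{measurement}{tag_part} {field_part} {ts_ns}"


if __name__ == "__main__":
    # Hypothetical sample: one inverter reporting its AC power in watts.
    print(to_line("inverter", {"device": "symo 1"}, {"pac": 1250}, 1609459200000000000))
```

The timestamp is given in nanoseconds since the Unix epoch, which is the default precision of the line protocol.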

Lost date and time

After a few days of metrics collection, I noticed that one of the inverters regularly loses its local date and time. Getting timeseries data with a timestamp of 2000-01-01T05:02:15 is not very helpful.

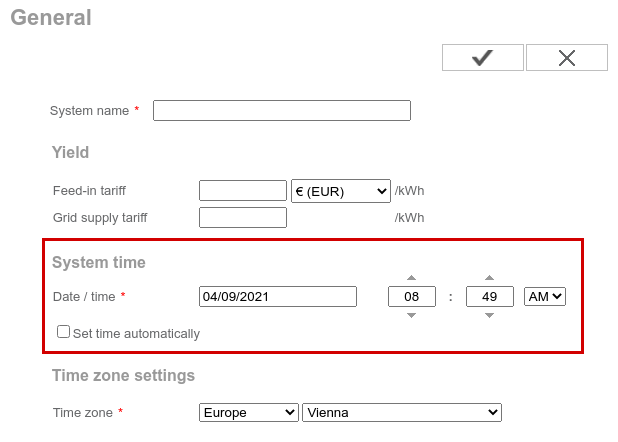
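Until the clock is fixed, such samples can at least be kept out of the database. Here is a minimal sketch of a plausibility check, assuming ISO 8601 timestamps. The cutoff date and the function name are arbitrary choices for illustration.

```python
from datetime import datetime, timezone

# Hypothetical cutoff: anything earlier is treated as coming from a reset clock.
EARLIEST_PLAUSIBLE = datetime(2015, 1, 1, tzinfo=timezone.utc)


def has_plausible_timestamp(iso_timestamp: str) -> bool:
    """Return False for timestamps that predate the cutoff."""
    ts = datetime.fromisoformat(iso_timestamp)
    if ts.tzinfo is None:
        # Assumption: timestamps without an offset are interpreted as UTC.
        ts = ts.replace(tzinfo=timezone.utc)
    return ts >= EARLIEST_PLAUSIBLE


if __name__ == "__main__":
    print(has_plausible_timestamp("2000-01-01T05:02:15"))
```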

The inverter’s web interface offers to set the system time and also has a checkbox for “Set time automatically”.

Great, simply enable automatic time synchronization and allow outgoing NTP traffic (and DNS). As it turns out, the inverter does not use NTP to synchronize its system time. It requires the user to enable the metrics upload to SolarWeb in order to synchronize its system time!

I did not want to re-implement parts of the proprietary, UDP-based protocol (PCAP dumps are available upon request), so I decided to check the API docs for endpoints related to date and time settings. Unfortunately, I could not find such an endpoint.

The next approach was to inspect the requests made by the web browser, rebuild them in curl, put them in a script and invoke that script regularly. The steps are simple:

- Authenticate with the device (only HTTP Digest auth is supported)

- Set date and time
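For reference, the first step boils down to parsing the challenge sent by the server and computing a hash over the credentials and the request. The sketch below shows that computation under the assumption that the device uses the MD5 algorithm with `qop=auth`, which is the common case but not something I can confirm for every firmware. The function names are my own, and the sample values are the well-known example from RFC 2617, section 3.5.

```python
import hashlib
import re


def parse_challenge(header_value: str) -> dict:
    """Parse 'Digest key="value", ...' into a dict (quoted and bare values)."""
    _, _, params = header_value.partition(" ")
    pairs = re.findall(r'(\w+)=(?:"([^"]*)"|([^,\s]+))', params)
    return {key: quoted or bare for key, quoted, bare in pairs}


def _md5(text: str) -> str:
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def digest_response(username, password, method, uri, challenge, nc, cnonce) -> str:
    """Compute the 'response' value, assuming algorithm=MD5 and qop=auth."""
    ha1 = _md5(f"{username}:{challenge['realm']}:{password}")
    ha2 = _md5(f"{method}:{uri}")
    return _md5(f"{ha1}:{challenge['nonce']}:{nc}:{cnonce}:auth:{ha2}")


# Challenge and credentials from the example in RFC 2617, section 3.5.
RFC_CHALLENGE = parse_challenge(
    'Digest realm="testrealm@host.com", qop="auth,auth-int", '
    'nonce="dcd98b7102dd2f0e8b11d0f600bfb0c093", '
    'opaque="5ccc069c403ebaf9f0171e9517f40e41"'
)

if __name__ == "__main__":
    print(digest_response("Mufasa", "Circle Of Life", "GET", "/dir/index.html",
                          RFC_CHALLENGE, "00000001", "0a4f113b"))
```

In practice there is no need to write this by hand, since HTTP clients such as curl and Requests implement it already. They do, however, need to find the challenge in the first place.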

As it turns out, the HTTP Digest implementation does not conform to RFC 7235, which defines the HTTP authentication framework. From section 4.1:

A server generating a 401 (Unauthorized) response MUST send a WWW-Authenticate header field containing at least one challenge. A server MAY generate a WWW-Authenticate header field in other response messages to indicate that supplying credentials (or different credentials) might affect the response.

The datalogger does not respond with a WWW-Authenticate header, but instead sends an X-WWW-Authenticate header, which breaks the authentication workflow in curl. As there is no way to override the expected HTTP header in curl, I decided to implement the (broken) Fronius HTTP Digest authentication myself in Python. The workaround is easy: subclass the HTTPDigestAuth class from Requests and fix up the header name before Requests reads the header value.

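```python
from requests.auth import HTTPDigestAuth


class HTTPDigestAuthFronius(HTTPDigestAuth):
    def handle_401(self, r, **kwargs):
        # The datalogger sends its challenge as X-WWW-Authenticate.
        # Copy it to the standard header name before Requests reads it.
        challenge = r.headers.get("x-www-authenticate")
        if challenge is not None:
            r.headers["www-authenticate"] = challenge
        return super().handle_401(r, **kwargs)
```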

The entire script is available here (tested on Python 3.9 with Requests 2.25).

Conclusion

Please use open standards and established protocols for common tasks, such as NTP for keeping the system time in sync. Furthermore, don’t mess with the standards: implement them as-is and test them with standard tools such as curl.